Like many, I’m genuinely excited about the possibilities of AI and look forward to using it to move ahead personally, at work, and across the organizations we serve. Yet alongside this optimism, there’s a paradox we can’t ignore. The theory of progression and regression reminds us that advances in one area can unintentionally erode human skills in another. The Law of Unintended Consequences shows that even well-intentioned technology can produce unexpected side effects. Skill Atrophy warns that abilities we stop practicing begin to decay. And the Paradox of Automation highlights that as machines take on more tasks, human expertise can decline precisely when it’s most needed. True progress, then, requires integrating AI thoughtfully while preserving and cultivating human judgment, intuition, and critical thinking.

The Theory of Progression and Regression

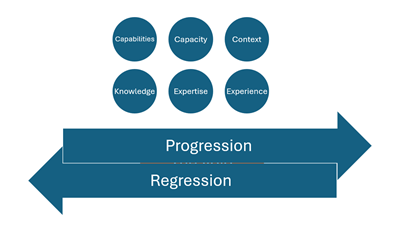

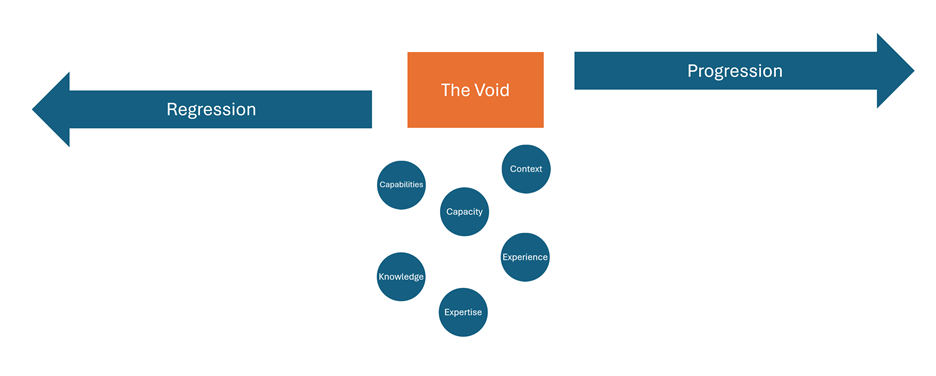

The theory of progression and regression posits that advancement in one domain often comes at the cost of decline in another. In business and technology, this shows up as a paradoxical relationship between automation and human knowledge. As we automate processes and rely increasingly on AI-driven decision-making, we risk creating what can be termed “The Void”: a dangerous gap in human capital, organizational knowledge, and contextual understanding. (See Figures 1 and 2.)

Automation and the Regression of Human Skills

The rise of automation and AI has undoubtedly brought tremendous benefits to businesses. It has increased efficiency, reduced errors, and made it possible to process vast amounts of data at unprecedented speed. However, this progression in technological capability is often accompanied by a regression in human skills and knowledge.

Consider the following examples:

- Financial Analysis: As AI systems become more adept at analyzing market trends and making investment recommendations, human financial analysts may lose the skills to perform deep, intuitive market analysis.

- Manufacturing: With the advent of fully automated production lines, workers may lose the hands-on skills and deep understanding of manufacturing processes that were once passed down through generations.

- Customer Service: As chatbots and AI assistants handle an increasing number of customer interactions, human representatives may lose the nuanced skills of problem-solving and empathetic communication.

- Decision-Making & Critical Thinking: As AI systems provide data-driven recommendations and predictive insights, humans may increasingly defer to algorithms rather than analyzing complex situations themselves. Over time, the ability to weigh competing variables, recognize biases, and make nuanced judgments can erode, leaving organizations dependent on technology while diminishing the workforce’s critical thinking skills.

In each of these cases, the information is still there, perhaps more abundant than ever, but human learning, the internalization of knowledge, and the development of intuitive understanding are regressing.

The Void: A Looming Threat to Organizational Knowledge

“The Void” represents the dangerous space between automated systems and human understanding. It’s a gap where critical organizational knowledge, context, and intuition can be lost. This void poses several risks to businesses:

- Loss of Contextual Understanding: While AI can process vast amounts of data, it often lacks the contextual understanding that comes from years of human experience. This can lead to decisions that look good on paper but fail to account for nuanced real-world factors.

- Erosion of Institutional Memory: As older employees retire and their roles are increasingly automated, organizations risk losing valuable institutional memory – the unwritten knowledge of “how things work” that often proves crucial in times of crisis or rapid change.

- Decreased Innovation Capacity: Innovation often springs from a deep understanding of a field, combined with the ability to think creatively and draw unexpected connections. As human knowledge regresses, this capacity for groundbreaking innovation may diminish.

- Vulnerability to System Failures: Over-reliance on automated systems can leave organizations vulnerable when these systems fail. If human knowledge has regressed to the point where employees can’t step in during system outages, the consequences can be severe.

- Reduced Adaptability: In rapidly changing environments, the ability to adapt quickly is crucial. Human intuition and experience-based decision-making often excel in novel situations where AI, limited by its training data, may falter.

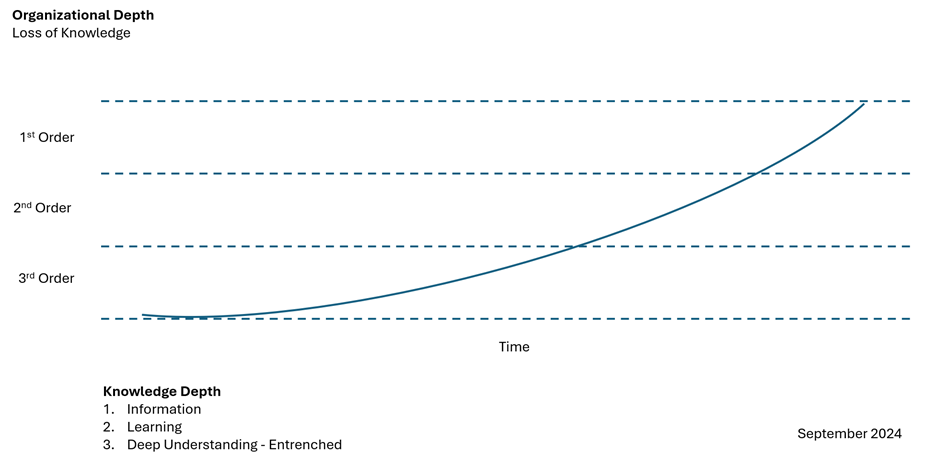

(See Figure 3)

Managing the Void: Strategies for Organizations

To prevent the creation of The Void and manage the delicate balance between technological progression and human knowledge regression, organizations must take proactive steps:

- Continuous Learning Programs: Implement robust, ongoing training programs that not only teach employees how to use new technologies but also ensure they understand the underlying principles and can operate without them if necessary.

- Knowledge Transfer Initiatives: Create structured programs for knowledge transfer from experienced employees to newer ones, ensuring that valuable institutional knowledge isn’t lost as roles become automated.

- Balanced Automation: Instead of fully automating processes, consider partial automation that keeps humans in the loop, allowing them to maintain their skills while benefiting from technological assistance.

- Cross-functional Teams: Encourage the formation of diverse teams that bring together technological expertise with deep domain knowledge, fostering an environment where AI and human insights can complement each other.

- Scenario Planning and Simulations: Regularly conduct exercises that require employees to solve problems without relying on automated systems, keeping their skills sharp and highlighting areas where human knowledge may be regressing.

- Cultural Shift: Foster a culture that values both technological progression and human expertise. Recognize and reward employees not just for efficiently using automated systems, but for demonstrating deep understanding and innovative thinking.

- Ethics and Governance Frameworks: Develop clear guidelines for the use of AI and automated systems, ensuring that the pursuit of efficiency doesn’t come at the cost of ethical considerations or long-term organizational resilience.

Conclusion: Bridging the Void

The theory of progression and regression reminds us that advancement is not a simple, linear process. As we press forward with automation and AI, we must be mindful of what might be regressing and take active steps to preserve crucial human knowledge and skills.

The key lies not in resisting technological progress, but in finding ways to advance that don’t create a void in human capabilities. By thoughtfully managing the balance between automation and human knowledge, organizations can harness the power of technology while maintaining the irreplaceable value of human insight, creativity, and adaptability.

In navigating this paradox of progress, we have the opportunity to create a future where technological advancement and human development go hand in hand, each enhancing the other. It’s in this balanced approach that we’ll find the true path to sustainable, resilient progress in the age of automation.

Figure 1 – Current State

In the current state, the organization fully leverages its capabilities, capacity, context, knowledge, expertise, and experience to operate effectively within its environment. Human judgment and institutional understanding are intact, allowing the organization to navigate complexity, make informed decisions, and adapt to changing conditions with confidence.

Figure 2 – Highly Automated State – Future

In a highly automated future state, the organization risks losing some or all of these critical human assets. As processes and decision-making shift to machines, gaps appear in knowledge, expertise, and experiential understanding. This “void” creates potential blind spots, reduces adaptability, and diminishes the organization’s ability to exercise judgment, intuition, and nuanced problem-solving.

Figure 3 – Erosion of Organizational Knowledge

Over time, organizational knowledge naturally erodes, moving from third-order knowledge—deep, entrenched understanding—to second-order knowledge, where insights are still learned but less embedded, and ultimately to first-order knowledge, which is little more than disconnected information. This regression is accelerated by high levels of automation, underutilization of skilled personnel, and the departure of key individuals, leading to a loss of critical context, experience, and the nuanced judgment that once guided decision-making.

Want to Learn More?

Discover how to successfully integrate AI while preserving human expertise. Connect with:

Michael Moorhouse

Moorhouse+Group, LLC